AI Videos Made Easy: Gaming GPUs with Just 6GB VRAM

This week, Lvmin Zhang at GitHub, in collaboration with Maneesh Agrawala of Stanford University, introduced FramePack. The system offers a practical implementation of video diffusion that uses a fixed-length temporal context, which makes processing more efficient and allows longer, higher-quality clips. The authors showed that a 13-billion-parameter model built on the FramePack architecture can generate a 60-second clip with only 6GB of video memory.

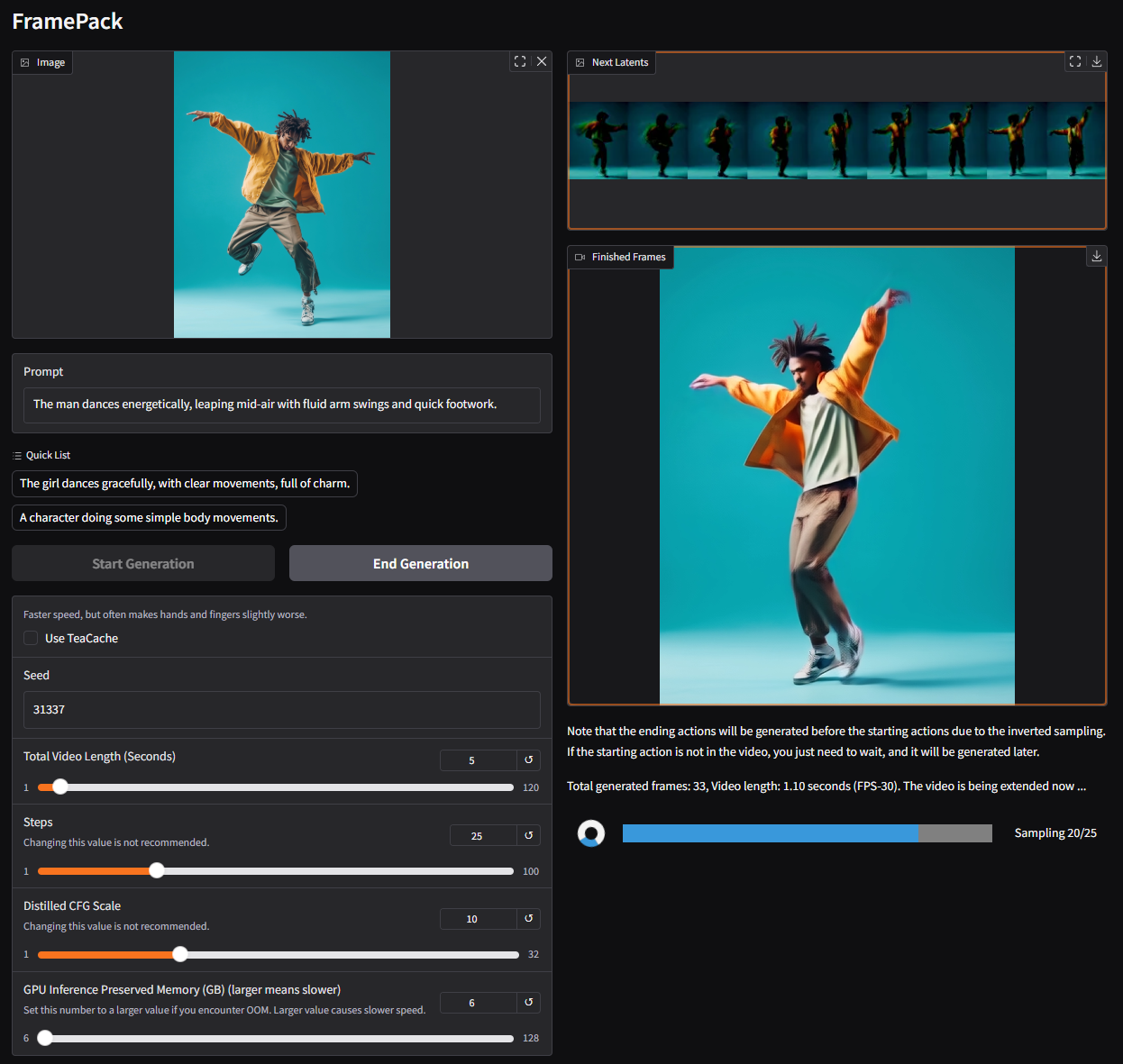

FramePack is a neural network architecture that uses multi-stage optimization techniques to enable local AI video generation. At the time of writing, the FramePack GUI runs a custom Hunyuan-based model under the hood, though the research paper notes that existing pre-trained models can also be fine-tuned using FramePack.

Typical video diffusion models take previously generated noisy frames as input and predict the next, slightly less noisy frame. The number of input frames considered for each prediction is called the temporal context length, and it grows as the video gets longer. As a result, conventional video diffusion models demand a lot of VRAM, with 12GB being a common starting point. You can get by with less memory, but at the cost of shorter clips, lower quality, and longer processing times.

Enter FramePack: a new architecture that compresses input frames, based on their importance, into a fixed-size context length, drastically reducing GPU memory overhead. All frames must be compressed so that together they fit within a set upper bound on context length. According to the authors, the resulting computational cost is similar to that of image diffusion.
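To see why a fixed upper bound is plausible, here is a minimal sketch that compares an uncompressed context with one where older frames are compressed more aggressively. The geometric halving schedule, the 1024-tokens-per-frame figure, and the function names are all illustrative assumptions, not values or code taken from FramePack itself.

```python
def uncompressed_context(num_frames: int, tokens_per_frame: int) -> int:
    """Context size when every past frame is kept at full size."""
    return num_frames * tokens_per_frame


def packed_context(num_frames: int, tokens_per_frame: int, ratio: int = 2) -> int:
    """Context size when a frame `age` steps in the past is shrunk by ratio**age.

    Hypothetical schedule: the newest frame (age 0) keeps all its tokens,
    the one before it keeps half, and so on. Frames old enough to round
    down to zero tokens contribute nothing.
    """
    total = 0
    for age in range(num_frames):
        total += tokens_per_frame // (ratio ** age)
    return total


if __name__ == "__main__":
    for n in (1, 8, 64, 512):
        print(n, uncompressed_context(n, 1024), packed_context(n, 1024))
```

Under these assumptions the uncompressed context grows linearly with the number of frames, while the packed context never reaches twice the size of a single frame, no matter how long the video gets. That bounded total is what lets memory use stay flat as clips grow.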

Coupled with techniques that mitigate "drifting," where quality degrades as the video gets longer, FramePack enables longer video generation without a significant loss of fidelity. At present, FramePack requires an NVIDIA RTX 30-, 40-, or 50-series GPU with support for the FP16 and BF16 data formats. Support on Turing and older architectures has not been verified, and there is no mention of AMD or Intel hardware. Linux is also among the supported operating systems.

Aside from the RTX 3050 4GB, most modern RTX GPUs meet or exceed the 6GB requirement. In terms of speed, an RTX 4090 can generate up to 0.6 frames per second (optimized with teacache), so performance will vary with your graphics card. Either way, each frame is displayed as soon as it is generated, giving immediate visual feedback.
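For a rough sense of what that speed means in practice, the back-of-the-envelope calculation below estimates how long a 60-second clip would take on an RTX 4090. It assumes 30 frames per second of playback and treats the peak 0.6 frames per second as sustained, so it is a best-case estimate rather than a benchmark; the function name is made up for illustration.

```python
def generation_time_seconds(clip_seconds: float,
                            playback_fps: float,
                            generation_fps: float) -> float:
    """Estimate wall-clock time to generate a clip.

    clip_seconds   -- length of the finished video
    playback_fps   -- frames per second of the finished video (assumed 30)
    generation_fps -- frames the GPU produces per second (0.6 at best on an RTX 4090)
    """
    total_frames = clip_seconds * playback_fps
    return total_frames / generation_fps


if __name__ == "__main__":
    seconds = generation_time_seconds(60, 30, 0.6)
    print(f"about {seconds / 60:.0f} minutes")
```

That works out to 1,800 frames and roughly 50 minutes of generation for one minute of video, and slower cards will take proportionally longer.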

The model in use likely has a 30 FPS cap, which may be limiting for many users. Still, rather than relying on costly third-party services, FramePack is paving the way to make AI video generation more accessible to the average consumer. Even if you're not a content creator, it is an entertaining tool for making GIFs and memes. Personally, I plan to try it out in my spare time.
